All user input is error... ish.

I learned the hard way... user input is NOT always error. Thanks Elon.

Hey, I’m Jacob ✌️ Welcome to all of the product people who subscribed since last time! If you’re not a subscriber yet, I hope you’ll consider joining this band of like-minded folks who are all learning how to build better products. Questions or feedback? Drop ‘em here.

I want to tell you a short, 4-part story of a hard-won product lesson. One where good intentions went wrong.

Part 1: All Input is Error

On June 10, 2021, Tesla unveiled the Model S Plaid. It was alluringly sexy with its sleek interior, undisturbed by the rows of buttons and knobs that plague any normal vehicle you might drive.

They even went so far as to remove the steering wheel stalks entirely.

And I'm not sure if it was for the shock factor or simply the public ramblings of Elon's wild product brain, but his rationale for this change was almost as startling as the change itself.

"I think, generally, all user input is error."

—Elon Musk

You can listen to the 10s segment for yourself starting at 15:45.

In the ensuing days, many people had an issue with that phrase because it felt as though he was saying the user's input is wrong and that, presumably, an all-knowing, sentient AI would be able to make better decisions.

And while I wouldn't put it past him, it’s not what Elon meant. He goes on to clarify in the next sentence.

"If you have to do something that the car could have done already, that should be taken care of. The software should just do it."

—Elon Musk

To help bring a bit of mental clarity to the discussion, I would reframe it as "all user input is failure." If you have to provide input, the car hasn't done its job properly. It's failed. It should have anticipated your desires (note: YOUR desires, not the AI's desires) and met them automatically.

Okay. Just wanted to clear that up before we move on.

Back to the story.

I remember hearing that 5-word phrase for the first time and unraveling it in my head.

It was a dauntingly large vision for the future.

It was a rallying cry for engineers and designers.

It was a bold proclamation of what Tesla stood for.

All in just 5 words. "All user input is error."

This all made sense to me. In the end, it was exactly what I had been trying to do for the last two years; I just hadn’t heard it summed up so succinctly before. Hearing that from one of my product heroes lit a fire within me to pursue it harder.

Part 2: Endless Off-beat Possibilities

My co-founder and I were building tools for the wildly underserved market of home service businesses. Existing tools were mediocre at best and we knew there had to be a better way.

In my idealistic fervor, I wanted to design a system that would automate some of the most tedious, repetitive parts of running a service business and, like most newly minted founders, was met with cold, harsh reality.

Every day, users would contact us asking for exceptions to the automated rules. Rules that I had so carefully designed for. Rules that I couldn't imagine why you would want to break.

Ahh, youth.

Now it’s a running joke that whatever we believe a user will do is probably not at all what the user will do. Memes like these are all too familiar:

As I spoke with more users, I discovered that circumstances in the field were much more fluid than I had originally known. To me, it seemed the process of booking a client, doing a job, invoicing for the job and getting paid was pretty straightforward.

But not every scenario fits the mold of a linear process with pre-defined inputs. Sometimes they're missing a piece of information, or they do something out of order, or they have to skip or repeat a step in a workflow... there is an endless combination of off-beat possibilities, and I simply couldn't anticipate them all.

Part 3: Outsourced Error

There was a fatal flaw in my plan to abstract away the user input. A flaw that, unfortunately for me, Elon didn't feel the need to elaborate on in his Model S Plaid launch speech 🙄

Fatal flaw: I didn't start with any training data.

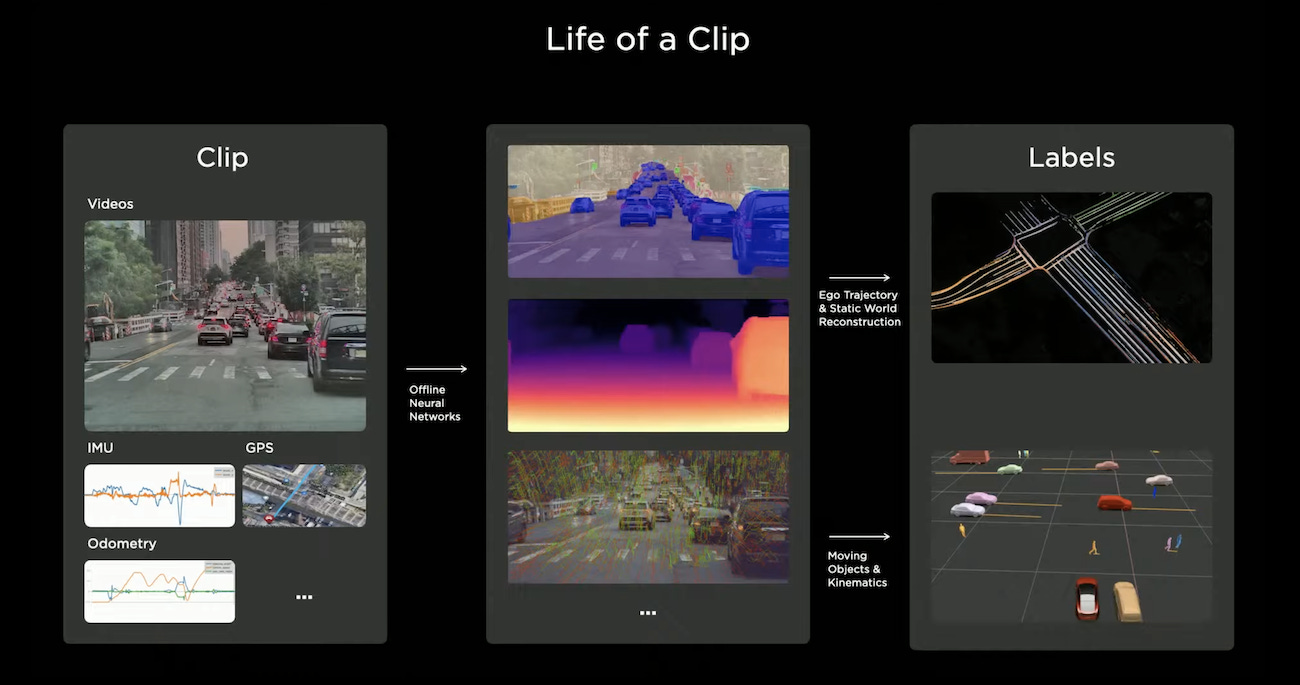

While Tesla had amassed an incomprehensibly large set of training data from their fleet of vehicles around the world, I was going off of anecdotes and conversations with a couple hundred users.

As you might imagine, I fell prey to one of the great ironies of automation: outsourced error.

"The important ironies of the classic approach to automation lie in the expectations of the system designers. [...] Designer errors can be a major source of operating problems."

—Lisanne Bainbridge, Ironies of Automation

In my quest to minimize user input, I forced my users to outsource the input to me, which, in the end, is just a less accurate form of user input.

Ouch.

What I realized too late is that every operator runs their service business slightly differently. And even if two operators ran them the same way, edge cases have a nasty habit of popping up all the time.

For every new business and every edge case, there's a new set of outcomes that the product designer must account for, which is impossible.

And the reality is that products that try to anticipate your needs and get it wrong are more annoying than products that don't anticipate your needs at all.

So while Tesla is ingesting massive data sets with robust machine learning systems and a huge engineering team, my company had... me.

And since I didn't have the engineering resources or data sets at my disposal to programmatically identify and address all of those edge cases, in a beautiful twist of irony, what I really needed was user input 😅

Classic.

Part 4: Give me input, or give me death

Let's revisit my adaptation of Elon's principle: all user input is failure.

Though swapping "error" for "failure" helped clarify the principle, there's still a lot of context missing. Context that I had to learn the hard way.

In reality, all user input is training data until the software becomes advanced enough to accurately anticipate your needs, at which point, all user input becomes failure.

But that's just not as catchy, is it? :)

While Elon has been preaching his 5-word gospel, he’s also been hard at work behind the scenes getting every scenario as dialed in as possible. When it comes to vehicles on the road, you can never be too safe. They only automate when they’ve identified a “can’t miss” opportunity.

Since coming to this realization, I haven't been shy about providing my users with granular controls or asking them for input. And as we grow, we'll find the patterns and slowly begin abstracting away some of the input. But for now, giving users a choice on what to do next will get them to their destination faster than us trying to do it for them. This is the part of the story Scott Belsky refers to as the “messy middle.”
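For the builders in the room, here's a minimal sketch of what "input is training data until it isn't" could look like in code. To be clear, this is not our actual system. `InputLearner`, the step and choice names, and both thresholds are made up for illustration. The idea is simply to log what users choose at each step and only offer to automate once one choice is close to a sure thing.

```python
from collections import Counter, defaultdict


class InputLearner:
    """Hypothetical helper: treat every user choice as training data and
    only suggest automating a step once the pattern is nearly unanimous."""

    def __init__(self, min_samples=50, min_agreement=0.98):
        # Both thresholds are illustrative assumptions, not tuned values.
        self.min_samples = min_samples
        self.min_agreement = min_agreement
        self.history = defaultdict(Counter)  # step -> Counter of choices

    def record(self, step, choice):
        """Log what the user actually chose at this step."""
        self.history[step][choice] += 1

    def suggestion(self, step):
        """Return the dominant choice if it is safe to automate, else None."""
        counts = self.history[step]
        total = sum(counts.values())
        if total == 0 or total < self.min_samples:
            return None  # not enough data yet: keep asking the user
        choice, hits = counts.most_common(1)[0]
        if hits / total >= self.min_agreement:
            return choice
        return None  # users disagree too often: keep asking the user


learner = InputLearner(min_samples=5, min_agreement=0.9)
for _ in range(5):
    learner.record("after_job", "send_invoice")
print(learner.suggestion("after_job"))
```

Until `suggestion` returns something, the product keeps asking. And if the exceptions start piling up again, it goes right back to asking.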

I’ll see you in the trenches.

—Jacob ✌️


There is a saying in Chiropractic Philosophy that "Average is not normal". In this case, it's referring to ideas such as "normal" blood pressure being 120/80. That's not normal... that's average, and average will kill you in the right circumstances. If we equate "user input" with us trying to manage the magnificent complexity of the body from the outside (i.e., medicines meant to "average out" our blood pressure), then it is immediately apparent that average can kill you. The human body already has the innate intelligence to manage our blood pressure, on the fly, with no input needed from our educated intelligence. Anyway, just a thought.

Best one yet IMO. 🙌